ResNet Architecture: A Comprehensive Guide to a Deep Learning Breakthrough

In the world of deep learning, one name that frequently stands out is ResNet Architecture. Whether you are just starting out or diving deep into neural networks, ResNet has proven to be one of the most groundbreaking advancements in image recognition and computer vision. This article is crafted to give you a clear understanding of what ResNet is, how it works, and why it has been so transformative. Buckle up as we explore this revolutionary topic in easy-to-understand terms.

What is ResNet Architecture?

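ResNet Architecture, or Residual Network, is a type of neural network designed to tackle one of the biggest challenges in deep learning: training very deep networks. As plain networks grow deeper, gradients struggle to reach the early layers (the vanishing gradient problem), and accuracy can saturate and then get worse (the degradation problem). ResNet was introduced by researchers at Microsoft Research in 2015 and has since become a fundamental building block in many computer vision applications.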

Before ResNet, training very deep networks was a tough nut to crack. The more layers you added, the harder it became for the model to learn: the training signal weakened on its way back to the early layers, and beyond a certain depth, accuracy actually dropped. ResNet Architecture addresses this with “skip connections,” which let the model train much deeper networks and learn richer features, resulting in better performance on tasks like image recognition.

The introduction of ResNet marked a huge leap forward, allowing researchers to train networks that are hundreds of layers deep without the optimization difficulties that plagued earlier plain architectures.

The Vanishing Gradient Problem

Before we jump into how ResNet Architecture works, it’s important to understand the problem it aims to solve: the vanishing gradient problem.

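When training deep neural networks, each layer is supposed to learn some aspect of the data and pass its findings on to the next layer. The weights are updated using gradients computed by backpropagation, which applies the chain rule: the gradient that reaches an early layer is a product of the local derivatives of every layer after it. When many of those factors are smaller than one, the product shrinks exponentially with depth, so the gradient becomes too small to update the earlier layers effectively. The layers near the output keep learning, but the ones near the input barely change. As a result, simply stacking more layers makes a network harder to train, not better.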
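Backpropagation computes the gradient at an early layer as a product of the local derivatives of all the layers after it. To make that shrinking product concrete, here is a toy sketch. It is an illustration under loud assumptions, not how real ResNets are built: each "layer" is a single scalar unit with a sigmoid activation (whose derivative never exceeds 0.25), and the hypothetical function `input_gradient` multiplies the local derivatives along the chain. The `residual=True` variant adds a skip path around each unit, so every local factor becomes 1 plus something, which previews the idea behind ResNet.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z == 0


def input_gradient(depth: int, w: float = 1.0, residual: bool = False,
                   x0: float = 0.5) -> float:
    """Chain-rule derivative d(output)/d(input) for a stack of scalar layers.

    Plain layer:    h_next = sigmoid(w * h)
    Residual layer: h_next = h + sigmoid(w * h)
    """
    h, grad = x0, 1.0
    for _ in range(depth):
        z = w * h
        local = w * sigmoid_grad(z)  # derivative of sigmoid(w * h) w.r.t. h
        if residual:
            local += 1.0             # the skip path contributes d(h)/d(h) = 1
            h = h + sigmoid(z)
        else:
            h = sigmoid(z)
        grad *= local                # chain rule: multiply the local derivatives
    return grad


for depth in (5, 20, 50):
    plain = input_gradient(depth)
    res = input_gradient(depth, residual=True)
    print(f"depth={depth:3d}  plain={plain:.3e}  residual={res:.3f}")
```

With 20 plain layers the gradient is bounded by 0.25^20 (about 1e-12), while with skip paths every factor is at least 1, so the gradient cannot vanish in this toy.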

Why Does ResNet Matter?

The beauty of ResNet Architecture lies in its simplicity and ingenuity. By adding residual blocks, ResNet allows the model to skip one or more layers and pass information directly to further layers. This way, even if a layer doesn’t add much, the network can still learn without getting stuck, effectively bypassing the problem of diminishing gradients.

Here’s why ResNet Architecture is so crucial:

- Eases the Vanishing Gradient Problem: Skip connections give gradients a direct path back to earlier layers, so they are far less likely to shrink to nothing.

- Supports Deeper Networks: It allows for more layers, resulting in better feature extraction.

- Achieves Higher Accuracy: ResNet outperformed earlier, plainer convolutional neural network (CNN) designs such as VGG and GoogLeNet on ImageNet.

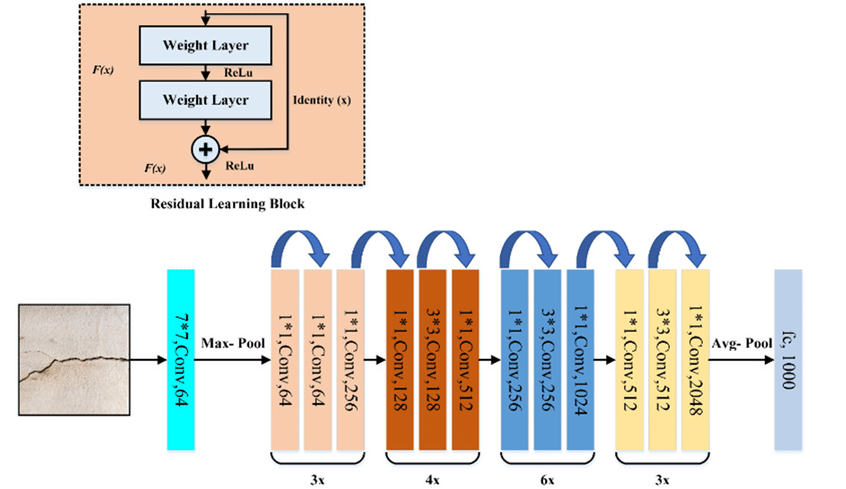

The Concept of Residual Blocks

The key to ResNet Architecture lies in its Residual Blocks. Let’s dive into what these blocks are and how they work.

In traditional neural networks, a stack of layers tries to learn the desired mapping, say H(x), directly from the input x. In ResNet, the stacked layers instead learn the residual, that is, the difference between the desired output and the input: F(x) = H(x) - x. The block then recovers the full mapping by adding the input back:

- H(x) = F(x) + x

The residual block passes the input through a few stacked layers to compute F(x), then adds the original input directly to their output. This shortcut path is what we call a skip connection.
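Here is a minimal NumPy sketch of such a block, assuming small fully connected (matrix) layers in place of the convolutions and leaving out batch normalization to keep it short. The function name `residual_block` and the random weights are illustrative, not taken from any library. Following the original design, a ReLU is applied after the addition.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Two-layer residual block on a feature vector x.

    F(x) = w2 @ relu(w1 @ x) is the residual branch; the skip connection
    adds x back before the final ReLU.
    """
    f = w2 @ relu(w1 @ x)   # residual branch F(x)
    return relu(f + x)      # H(x) = F(x) + x, followed by ReLU


rng = np.random.default_rng(0)
d = 4
x = relu(rng.normal(size=d))             # non-negative, like a previous block's output
w1 = rng.normal(scale=0.1, size=(d, d))
w2 = rng.normal(scale=0.1, size=(d, d))
print(residual_block(x, w1, w2))
```

Because the weights are small, F(x) is a small correction and the output stays close to x, which is exactly the "learn only the difference" behavior described above.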

Skip Connections Explained

Skip connections are at the heart of ResNet Architecture. By letting information “skip” certain layers, they allow for smoother gradient flow and prevent layers from getting stuck in a state where they don’t learn anything new. Essentially, they help ensure that adding a new layer does not hurt the learning process of the entire network.

- Identity Mapping: Skip connections make the identity mapping easy to represent. If the additional layers have nothing useful to contribute, they only need to push F(x) toward zero, and the block passes its input through unchanged, which keeps the network stable.

- Preventing Degradation: The degradation problem, where adding more layers makes even the training accuracy worse, is largely addressed by skip connections.

This approach of skipping ensures that the model doesn’t always rely on deeper transformations but instead can maintain a balance between new information and retained features.
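The identity-mapping argument can be checked directly. In this hypothetical sketch, each "extra layer" is a zero weight matrix, standing in for layers that have nothing to contribute, and the skip connection wraps a single layer rather than the two or three used in real ResNet blocks. A plain stack of such layers erases its input, while the residual stack passes it through untouched.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def plain_stack(x: np.ndarray, weights: list[np.ndarray]) -> np.ndarray:
    """Ordinary stacked layers: each layer replaces its input."""
    for w in weights:
        x = relu(w @ x)
    return x


def residual_stack(x: np.ndarray, weights: list[np.ndarray]) -> np.ndarray:
    """Same layers, but each one only adds a residual on top of its input."""
    for w in weights:
        x = relu(w @ x + x)  # skip connection around every layer
    return x


d, depth = 4, 10
x = np.array([0.5, 1.0, 0.0, 2.0])
# Extra layers that "can't contribute": all weights are zero.
useless = [np.zeros((d, d)) for _ in range(depth)]

print(plain_stack(x, useless))     # every feature is wiped out
print(residual_stack(x, useless))  # the input passes through unchanged
```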

The Structure of ResNet Architecture

A basic ResNet Architecture follows a very well-defined structure. Below, we break down its components and layers.

Layer Breakdown

- Input Layer: As with many CNN models, the input image is fed into the network.

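- Convolutional Layers: The input first passes through a single 7×7 convolution with stride 2 and a 3×3 max-pooling layer, which extract low-level features and reduce the image resolution.

- Batch Normalization: Applied after every convolution, this normalizes the layer outputs to make learning faster and more stable.

- Residual Blocks: The key feature of ResNet, organized into four stages. Each block has either two 3×3 convolutional layers (the basic block used in ResNet-18 and ResNet-34) or three layers (the 1×1, 3×3, 1×1 bottleneck block used in ResNet-50 and deeper) that learn residual features.

- Pooling and Fully Connected Layer: Finally, global average pooling turns each feature map into a single number, and one fully connected layer maps the result to class scores.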
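To see how these pieces shrink the image, the sketch below applies the standard output-size formula for convolutions and pooling. It assumes a 224×224 input and the ImageNet configuration from the original paper: a 7×7 stem convolution with stride 2 and padding 3, 3×3 max pooling with stride 2 and padding 1, and a stride-2 3×3 convolution at the start of each later stage. `conv_out` is a helper written for this example.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224                                              # typical ImageNet input: 224x224
size = conv_out(size, kernel=7, stride=2, padding=3)    # stem: 7x7 conv, 64 channels
print("after 7x7 conv:", size)
size = conv_out(size, kernel=3, stride=2, padding=1)    # 3x3 max pool
print("after max pool:", size)

stage_sizes = [size]                                    # first residual stage keeps this size
for _ in range(3):                                      # the next three stages each halve it
    size = conv_out(size, kernel=3, stride=2, padding=1)
    stage_sizes.append(size)
print("residual stage resolutions:", stage_sizes)
```

The last stage works on 7×7 feature maps, which global average pooling reduces to one value per channel before the fully connected layer.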

Types of ResNet

There are multiple versions of ResNet Architecture, each with a different number of layers:

- ResNet-18: A smaller model with 18 layers, used for less complex tasks.

- ResNet-34: Uses the same basic blocks as ResNet-18 but nearly twice as many layers (34), for better accuracy.

- ResNet-50: A popular version with 50 layers that switches to three-layer bottleneck blocks, known for being powerful yet manageable.

- ResNet-101: Contains 101 layers and is used for more complex tasks where the extra capacity pays off, such as backbones for detection and segmentation.

- ResNet-152: The deepest of the standard variants, with 152 layers, designed for very demanding tasks where accuracy matters more than compute.
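The numbers in these names count the layers with learnable weights: the stem convolution, every convolution inside the residual blocks, and the final fully connected layer (pooling layers and projection shortcuts are not counted). The sketch below reproduces the names from the per-stage block counts reported in the original paper; the dictionary layout and the function name `weighted_layers` are just for illustration.

```python
# Blocks per stage (four stages) and weighted layers per block for each variant.
CONFIGS = {
    "ResNet-18": ([2, 2, 2, 2], 2),    # basic blocks: two 3x3 convs
    "ResNet-34": ([3, 4, 6, 3], 2),
    "ResNet-50": ([3, 4, 6, 3], 3),    # bottleneck blocks: 1x1, 3x3, 1x1 convs
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}


def weighted_layers(blocks_per_stage: list[int], layers_per_block: int) -> int:
    """Stem conv + all convs inside residual blocks + final fully connected layer."""
    return 1 + sum(blocks_per_stage) * layers_per_block + 1


for name, (blocks, per_block) in CONFIGS.items():
    print(name, weighted_layers(blocks, per_block))
```

Note that ResNet-34 and ResNet-50 share the same block counts; the jump in depth comes entirely from switching to three-layer bottleneck blocks.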

The Math Behind ResNet

Now, let’s get a little technical, but I promise not to overwhelm you. In a residual block, the stacked layers learn a residual function F(x), and the block’s output is represented mathematically as:

- H(x) = F(x) + x

- H(x) is the output of the block.

- F(x) is the residual function computed by the stacked layers (convolution, batch normalization, activation).

- x is the input, passed unchanged along the skip connection.

This equation shows that even if F(x) is driven to zero, H(x) still carries the identity information from x. In other words, an added block can always fall back to passing its input through unchanged, so deeper layers that are initially underperforming do not block the signal for the rest of the network.
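Why this helps gradients can be shown in a short derivation. Differentiating the block output with respect to its input, and ignoring the ReLU that follows the addition (a simplification), gives the following, where L stands for the training loss and I for the identity matrix:

```latex
\begin{aligned}
H(x) &= F(x) + x \\
\frac{\partial H}{\partial x} &= \frac{\partial F}{\partial x} + I \\
\frac{\partial \mathcal{L}}{\partial x}
  &= \frac{\partial \mathcal{L}}{\partial H}\,\frac{\partial H}{\partial x}
   = \frac{\partial \mathcal{L}}{\partial H}\,\frac{\partial F}{\partial x}
   + \frac{\partial \mathcal{L}}{\partial H}
\end{aligned}
```

The last term carries the gradient from the block's output straight back to its input, so even when ∂F/∂x is small, earlier layers still receive a useful signal.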

Applications of ResNet Architecture

One of the main reasons ResNet Architecture is so popular is its versatility. Let’s explore where and how ResNet is applied in real-world problems:

1. Image Classification

ResNet Architecture is widely used in image classification tasks. It was designed and validated on the ImageNet dataset, one of the largest and most challenging image classification benchmarks, and an ensemble of ResNets won the ILSVRC 2015 classification task with a 3.57% top-5 error rate on the test set. ResNet remains a standard baseline for image classification today.

2. Object Detection

When combined with other frameworks like Faster R-CNN, ResNet serves as the backbone model for extracting key features used to identify and locate objects in images.

3. Medical Imaging

In medical diagnostics, accurate identification of tumors, cells, and other anomalies is crucial. ResNet is widely used as the feature extractor in models that help doctors analyze X-rays, MRIs, and CT scans.

4. Face Recognition

ResNet is also commonly used in facial recognition applications, where deep features of human faces are extracted and used to identify individuals.

How ResNet Revolutionized Deep Learning

The impact of ResNet Architecture goes beyond just creating deep models. Its contributions to the field of machine learning are long-lasting.

- Building Deeper Models: Before ResNet, training very deep models was a headache. ResNet made networks with hundreds of layers practical; the original paper even trained a 1,202-layer network on CIFAR-10, although it generalized slightly worse than a 110-layer version, which the authors attributed to overfitting.

- Inspiring New Architectures: After ResNet, newer architectures like DenseNet, Inception-ResNet, and others started to appear, building upon the success of residual connections.

- Wide Adoption: ResNet is used by major tech companies and researchers across the globe, pushing advancements in fields like robotics, computer vision, and even video analysis.

Comparison with Other Architectures

How does ResNet Architecture stack up against other deep learning architectures? Let’s do a quick comparison.

| Feature | ResNet | VGG | Inception |
|---|---|---|---|
| Depth | Very deep (18 to 152 layers in the standard variants) | Moderate (16-19 layers) | Deep (22 layers in GoogLeNet), with parallel branches of mixed depth |
| Training Complexity | Moderate; skip connections make very deep models easy to optimize | High; many parameters (about 138M in VGG-16) | Moderate |
| Accuracy | Very high | High | High |
| Key Feature | Residual blocks | Simple, uniform stacks of 3×3 convolutions | Inception modules |

From the table, it’s clear that ResNet Architecture holds an edge in terms of depth and accuracy, thanks to its innovative approach of using residual blocks.

Pros and Cons of ResNet Architecture

Like any technology, ResNet Architecture comes with its own set of advantages and disadvantages.

Pros

- Easier to Train Deep Networks: By using skip connections, ResNet makes training deeper models simpler.

- Strong Performance: It delivers excellent accuracy across computer vision tasks and set the state of the art when it was introduced.

- Flexible: ResNet has different versions (like ResNet-50, ResNet-101) that can be used depending on the task’s complexity.

Cons

- Computational Cost: Training very deep models like ResNet-152 can be computationally expensive and require more resources.

- Complexity of Implementation: While not overly complicated, the concept of residual learning and designing skip connections can be difficult to grasp initially for beginners.
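One design detail that often trips up beginners: the skip connection can only add x to F(x) when both have the same shape. When a block changes the number of channels (or, in the real network, the spatial resolution), the original paper uses a projection shortcut, a learned linear map (implemented as a 1×1 convolution) applied to x. This sketch mimics that with a plain matrix `w_proj` on feature vectors; the function and argument names are hypothetical.

```python
from typing import Optional

import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray,
                   w_proj: Optional[np.ndarray] = None) -> np.ndarray:
    """y = relu(F(x) + shortcut(x)).

    If the residual branch changes the feature size, the identity shortcut
    no longer matches, so a linear projection w_proj maps x to the new size.
    """
    f = w2 @ relu(w1 @ x)                            # residual branch F(x)
    shortcut = x if w_proj is None else w_proj @ x   # identity or projection
    if f.shape != shortcut.shape:
        raise ValueError(f"shape mismatch: F(x) {f.shape} vs shortcut {shortcut.shape}")
    return relu(f + shortcut)


rng = np.random.default_rng(0)
d_in, d_out = 4, 8
x = relu(rng.normal(size=d_in))
w1 = rng.normal(scale=0.1, size=(d_out, d_in))    # widens features from 4 to 8
w2 = rng.normal(scale=0.1, size=(d_out, d_out))
w_proj = rng.normal(scale=0.1, size=(d_out, d_in))

y = residual_block(x, w1, w2, w_proj)             # works: both paths have size 8
print(y.shape)
# residual_block(x, w1, w2) raises ValueError: a 4-vector cannot be added to an 8-vector
```

When shapes already match, the paper prefers the parameter-free identity shortcut; projections are used only where the dimensions change.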

Practical Tips for Using ResNet Architecture

If you’re interested in implementing ResNet Architecture in your projects, here are a few practical tips:

- Start Small: For simpler tasks, start with ResNet-18 or ResNet-34, as they are faster to train and require fewer resources.

- Pre-trained Models: Many libraries offer pre-trained ResNet models. Use these as a starting point, especially if you’re working on image classification.

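- Keep Batch Normalization: Standard ResNets already apply batch normalization after every convolution. Keep it in place if you build your own variant, since it is essential for training stability and convergence in deep networks like ResNet.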

- Fine-Tune with Caution: When using a pre-trained ResNet, fine-tuning only the last few layers can often yield better performance than retraining the entire network from scratch.

Conclusion: The Lasting Impact of ResNet Architecture

ResNet Architecture has changed the way we think about deep learning. By easing the vanishing gradient and degradation problems, it enabled networks to become deeper and more effective without the usual optimization pitfalls. The introduction of residual blocks was a game-changer, allowing information and gradients to flow smoothly across layers, which led to new levels of accuracy and efficiency in computer vision tasks.

Whether it’s image classification, object detection, or medical imaging, ResNet Architecture has proven itself to be a versatile and powerful tool in a wide range of applications. Its influence extends far beyond the original paper, inspiring numerous other architectures and techniques in deep learning.

To sum it all up, the journey from plain stacked networks to ResNet Architecture represents a crucial milestone in the development of artificial intelligence. With its simple yet elegant solution to deep learning challenges, ResNet has indeed paved the way for future innovations, making it an indispensable tool for anyone diving into the world of neural networks.

I hope this comprehensive guide helps you better understand ResNet Architecture and its significance. If you have more questions or want to dive into specific implementations or case studies, feel free to reach out. Deep learning is a fascinating field, and there’s always more to explore!
